Introduction

As a digital marketer, one of your fundamental tasks is to ensure that your website is easily accessible to search engines. One of the most critical components of this process is the XML sitemap. XML sitemaps act as a roadmap for search engines, guiding them through the structure of your site. Used properly, they can help improve your site’s SEO performance, fix indexing issues, and even prevent unnecessary errors from arising. But mastering XML sitemaps requires more than just creating them. It involves submitting sitemaps correctly, understanding the sitemap index, fixing errors that arise, and managing the robots exclusion protocol. In this post, I will guide you through all these aspects, including how to leverage Google Search Console to monitor and optimize your sitemaps for the best SEO results.

What is an XML Sitemap?

An XML sitemap is a file that lists the pages of your website to tell search engines about the organization and structure of your content. It serves as a way for search engines to discover your web pages, especially those that are harder to reach through normal crawling. Without an XML sitemap, a search engine may miss some of your most important pages.
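
To make the format concrete, here is a minimal sketch in Python that builds a small sitemap using only the standard library. The domain and page paths are placeholders, and the helper name `build_sitemap` is my own for illustration; a real site would usually generate this file from its CMS or an SEO plugin.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a sitemap XML string listing the given absolute URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # <loc> holds the page address
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages on a placeholder domain.
sitemap_xml = build_sitemap([
    "https://www.yoursite.com/",
    "https://www.yoursite.com/blog/",
])
print(sitemap_xml)
```

Each page gets one `<url>` entry with a `<loc>` child; that is the only required information per page.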

Why Are XML Sitemaps Important?

XML sitemaps offer a number of benefits:

- Improved Crawlability: Helps search engines find all your pages, especially if your website has complex or deep navigation.

- Faster Indexing: Submitting an XML sitemap helps search engines discover new and updated pages sooner, although it does not guarantee that every page will be indexed.

- Error Tracking: You can identify crawling issues and resolve them faster.

Let’s dive deeper into how you can maximize the effectiveness of XML sitemaps through submission, indexing, error fixing, and robots exclusion.

Submitting Sitemaps to Google Search Console

How to Submit Your Sitemap

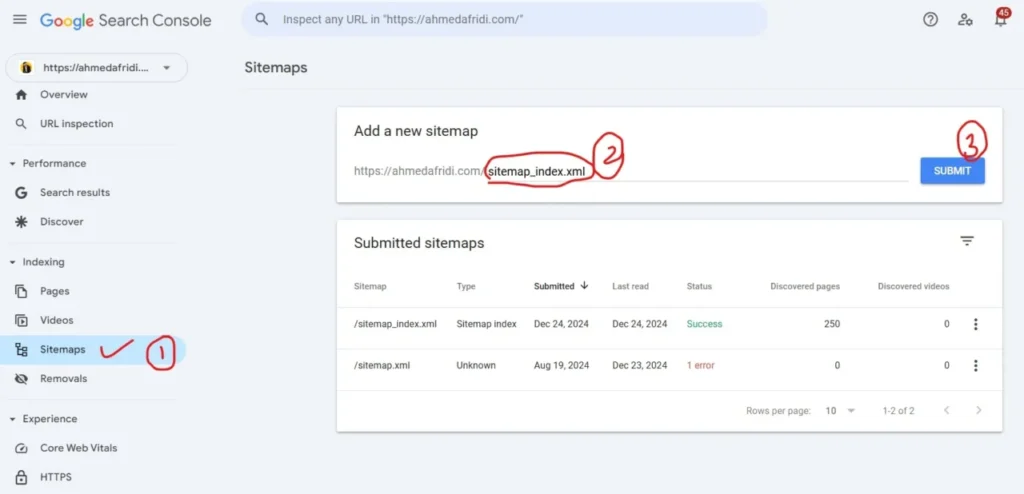

Once you’ve created your XML sitemap, the next step is to submit it to search engines. For Google, the best practice is to submit it through Google Search Console (GSC). Here’s how:

- Log in to Google Search Console: If you haven’t already set up GSC for your website, check out my Google Search Console Overview and Setting Up Google Search Console guides.

- Go to the Sitemaps Section: In the GSC dashboard, click on the ‘Sitemaps’ option under the ‘Indexing’ section (labelled ‘Index’ in older versions of the interface).

- Enter Your Sitemap URL: In the ‘Add a new sitemap’ section, enter the URL of your sitemap. If it’s located in the root directory, your URL will look like this: https://www.yoursite.com/sitemap.xml.

- Click Submit: After you’ve entered your sitemap URL, click ‘Submit.’ Google will now begin the process of crawling the URLs within your sitemap.

For a more comprehensive guide on submitting sitemaps, you can check the Google Search Console help page.

Why Submit Your Sitemap?

Submitting your sitemap through GSC makes Google aware of the pages on your site that you want crawled. This is particularly crucial for new websites or those with complex structures. Furthermore, GSC will show you the status of your submitted sitemaps, including any errors or issues, so you can quickly act on them.

Sitemap Index: A Powerful Tool for Managing Large Websites

For websites with a large number of pages, creating a sitemap index is a smart approach. A sitemap index is essentially a sitemap that lists multiple sitemaps. Rather than having one massive sitemap, which could be overwhelming and impractical, the sitemap index organizes everything neatly into manageable files.

When Should You Use a Sitemap Index?

You should consider using a sitemap index if:

- Your site has over 50,000 URLs (the limit for a single XML sitemap).

- Your website has multiple types of content (blogs, products, images, etc.) that need to be grouped.

- You want to have better control and organization over your website’s crawl structure.

For example, a sitemap index might reference separate sitemaps for articles, blog posts, and products, each covering one section of the site. Note that a sitemap index can list only sitemap files, not other sitemap indexes.

In Google Search Console, you can submit a sitemap index in the same way as a regular sitemap, making it easier to manage large sites effectively.
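
As a rough sketch of how this splitting could work, the Python below chunks a URL list at the 50,000-URL limit and builds an index that points at one child sitemap per chunk. The product URLs, the `sitemap-products-N.xml` file names, and the helper names are all hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # per-file limit mentioned above


def chunk_urls(urls, size=MAX_URLS_PER_SITEMAP):
    """Split a list of URLs into lists of at most `size` entries."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_sitemap_index(sitemap_urls):
    """Return sitemap index XML that points at each child sitemap URL."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for url in sitemap_urls:
        entry = ET.SubElement(index, "sitemap")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(index, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical site with 120,000 product pages.
all_urls = [f"https://www.yoursite.com/products/{n}" for n in range(120_000)]
chunks = chunk_urls(all_urls)

# One child sitemap file per chunk (file names are illustrative).
child_sitemaps = [
    f"https://www.yoursite.com/sitemap-products-{i + 1}.xml"
    for i in range(len(chunks))
]
index_xml = build_sitemap_index(child_sitemaps)
print(len(chunks), "child sitemaps")
print(index_xml)
```

Each chunk would then be written out as its own sitemap file, and only the index URL needs to be submitted in GSC.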

Errors in Sitemaps and How to Fix Them

When submitting sitemaps, it’s common to encounter errors that need immediate attention in Google Search Console. These errors may prevent Google from fully indexing your content, which can hurt your SEO performance.

Common Errors in Sitemaps

- Invalid URL: This occurs if the URL in your sitemap is incorrect or broken.

- 403 or 404 Error: If Googlebot encounters a ‘403 Forbidden’ or ‘404 Not Found’ error when trying to crawl a page, it will flag it as an issue.

- Malformed Sitemap: The XML syntax is incorrect, making it unreadable for Google.

- Too Many URLs: A sitemap file cannot contain more than 50,000 URLs or be larger than 50 MB uncompressed, so exceeding either limit will cause issues.

How to Fix Sitemap Errors

To fix errors in your sitemap, follow these steps:

- Identify the Error: In Google Search Console, go to the ‘Sitemaps’ section to check for any issues with your sitemap. Google will display the error type.

- Resolve the Issue:

  - For broken URLs, make sure the links are live and return a proper response code (200 OK).

  - For malformed sitemaps, ensure your sitemap adheres to the proper XML format.

  - If you have too many URLs, split your sitemap into multiple files and use a sitemap index.

- Resubmit Your Sitemap: After resolving the error, resubmit your sitemap in GSC for re-crawling.

For more detailed steps on fixing crawl errors, check out my post on Fixing Crawl Errors in GSC.
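
Some of these checks can be run locally before you resubmit. The sketch below is a simplified pre-flight check in Python, not a replacement for GSC's own report: it catches malformed XML, too many URLs, and entries that are not absolute http(s) URLs. It does not request the pages, so 403 and 404 responses still need to be checked separately. The function name `check_sitemap` and the sample data are my own.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

LOC_TAG = "{http://www.sitemaps.org/schemas/sitemap/0.9}loc"
MAX_URLS = 50_000


def check_sitemap(xml_text):
    """Return a list of human-readable problems found in a sitemap string."""
    try:
        root = ET.fromstring(xml_text.encode("utf-8"))
    except ET.ParseError as exc:
        return [f"Malformed XML: {exc}"]

    problems = []
    locs = [(el.text or "").strip() for el in root.iter(LOC_TAG)]
    if len(locs) > MAX_URLS:
        problems.append(f"Too many URLs: {len(locs)} (limit {MAX_URLS})")
    for loc in locs:
        parts = urlparse(loc)
        # Sitemap entries must be absolute http(s) URLs.
        if parts.scheme not in ("http", "https") or not parts.netloc:
            problems.append(f"Invalid URL: {loc!r}")
    return problems


# Illustrative samples: one valid, one with a relative URL, one truncated.
GOOD = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.yoursite.com/</loc></url>
</urlset>"""
RELATIVE = GOOD.replace("https://www.yoursite.com/", "/about")
BROKEN = GOOD.replace("</urlset>", "")

for name, sample in (("good", GOOD), ("relative", RELATIVE), ("broken", BROKEN)):
    print(name, check_sitemap(sample))
```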

Understanding the Robots Exclusion Protocol

The robots exclusion protocol (or robots.txt file) is a key component of managing your site’s crawling and indexing behavior. This file tells search engine bots which pages to crawl and which to ignore.

Why You Need a Robots Exclusion Protocol

It’s important to control which parts of your website search engines can access. For example, you might not want Google to crawl your admin pages, thank you pages, or internal search results pages. Using the robots.txt file allows you to prevent search engines from wasting resources crawling irrelevant content.

Here’s an example of a simple robots.txt file:

User-agent: *

Disallow: /admin/

Disallow: /search/

In this case, it tells all search engines (User-agent: *) not to crawl the admin and search result pages. You can also combine the robots.txt file with your XML sitemap to improve crawling efficiency.
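
If you want to confirm how rules like these are interpreted, Python's standard `urllib.robotparser` module can evaluate them locally. In the sketch below the `Sitemap:` line is my addition to the example (it is one common way to point crawlers at your sitemap from robots.txt), and the tested paths are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# The example rules from above, plus an added Sitemap line (an assumption).
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search/
Sitemap: https://www.yoursite.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Hypothetical paths to test against the rules.
for path in ("/admin/settings", "/search/results", "/blog/xml-sitemaps"):
    url = "https://www.yoursite.com" + path
    verdict = "allowed" if parser.can_fetch("*", url) else "blocked"
    print(path, "->", verdict)

# Sitemap URLs declared in the file (requires Python 3.8 or newer).
print(parser.site_maps())
```

Keep in mind that this parser is a generic implementation; individual search engines may treat edge cases such as wildcards slightly differently.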

For an in-depth guide, see the official Robots Exclusion Protocol website.

How the Robots.txt Affects Sitemaps

While the robots.txt file prevents search engines from crawling certain pages, the XML sitemap provides them with URLs they should prioritize. These two work together to ensure optimal crawling without waste. However, remember that if you block certain pages in robots.txt, Google won’t crawl them, even if they appear in your sitemap.
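
One way to spot this kind of conflict is to cross-check the two files. The following is a minimal sketch, assuming you already have both files as strings; the function name `blocked_sitemap_urls` and the sample content are illustrative only.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

LOC_TAG = "{http://www.sitemaps.org/schemas/sitemap/0.9}loc"


def blocked_sitemap_urls(sitemap_xml, robots_txt, user_agent="Googlebot"):
    """Return sitemap URLs that robots.txt disallows for `user_agent`."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    root = ET.fromstring(sitemap_xml.encode("utf-8"))
    urls = [(el.text or "").strip() for el in root.iter(LOC_TAG)]
    return [url for url in urls if not rules.can_fetch(user_agent, url)]


# Illustrative inputs: the sitemap lists a page that robots.txt blocks.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search/
"""
SITEMAP_XML = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.yoursite.com/blog/</loc></url>
  <url><loc>https://www.yoursite.com/search/results</loc></url>
</urlset>"""

conflicts = blocked_sitemap_urls(SITEMAP_XML, ROBOTS_TXT)
print(conflicts)
```

Any URL this returns should either be removed from the sitemap or unblocked in robots.txt, depending on whether you want it crawled.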

How to Remove a Sitemap from Google Search Console?

Removing a sitemap from GSC is straightforward. Here’s how you can do it:

- Log in to Google Search Console: Log in and select the property.

- Go to the Sitemaps Section: Click on “Sitemaps”.

- Locate the Sitemap: In the ‘Sitemaps’ section, you will see a list of all the sitemaps that have been submitted for your site. Find the sitemap that you want to remove.

- Click on the Three Dots: Next to the sitemap you want to remove, click on the three vertical dots (More options).

- Delete the Sitemap: Click “Remove” to delete the sitemap from the list.

What to Do When Google Search Console Couldn’t Fetch Sitemap?

If Google Search Console couldn’t fetch your sitemap, it means that Googlebot is having trouble accessing your sitemap file. This could be due to a number of reasons, but don’t worry, you can fix it. Here’s what to do:

- Check the Sitemap URL: Ensure that the URL of the sitemap you submitted is correct. This means it should lead to an active sitemap file (e.g., https://www.yoursite.com/sitemap.xml). Sometimes, even a small mistake like a typo or an incorrect path can cause the issue.

- Verify Server Accessibility: Check if the server hosting your sitemap is live and accessible. If your server is down, Google won’t be able to fetch your sitemap. You can test the URL in a browser or use online tools like Down For Everyone Or Just Me to verify if the server is working.

- Check for Robots.txt Restrictions: Ensure that your robots.txt file isn’t blocking Googlebot from accessing the sitemap. The robots.txt file should not have any lines like:

Disallow: /sitemap.xml

If you find such lines, remove or modify them to allow Googlebot to access your sitemap.

- Ensure Proper Sitemap Format: Double-check the format of the sitemap. If your sitemap is malformed, Google will not be able to read it. It should be properly structured XML. To verify the format, use an XML validator.

- Look for DNS Issues: Sometimes DNS issues can prevent Googlebot from fetching your sitemap. Verify that your domain’s DNS settings are correctly configured, especially if you’ve recently migrated to a new server or made any changes to your domain.

- Wait for Re-crawling: If all seems fine but Google still can’t fetch the sitemap, give it some time. Sometimes it takes Google a little while to process requests. You can also resubmit the sitemap in the ‘Sitemaps’ section to prompt a new fetch. Note that “Request indexing” under the URL Inspection Tool applies to individual pages rather than to sitemap files.

- Check for HTTP Errors: If your sitemap is showing an error like 404 (Not Found) or 500 (Server Error), resolve the issue on your server and make sure the sitemap is accessible. You can use tools like GTMetrix or Pingdom to check your sitemap URL for any HTTP errors.
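
Several of the checks above can be combined into one small script. This is a rough sketch using Python's standard library: `diagnose` maps a status code and response body to a likely cause, and `fetch_and_diagnose` performs the actual request. Both function names and the messages are my own, and the script only sees what your machine sees, which may differ from what Googlebot sees.

```python
import urllib.error
import urllib.request
import xml.etree.ElementTree as ET


def diagnose(status, body):
    """Map an HTTP status and response body to a likely cause."""
    if status == 404:
        return "Not found: check the sitemap URL for typos or a wrong path"
    if status in (401, 403):
        return "Access denied: check server or firewall rules for crawlers"
    if status >= 500:
        return "Server error: check that the host is up and healthy"
    if status != 200:
        return f"Unexpected status {status}"
    try:
        ET.fromstring(body)
    except ET.ParseError as exc:
        return f"Fetched, but the XML is malformed: {exc}"
    return "OK: sitemap fetched and parsed"


def fetch_and_diagnose(url, timeout=10):
    """Fetch `url` over the network and diagnose the response."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return diagnose(resp.status, resp.read())
    except urllib.error.HTTPError as err:
        return diagnose(err.code, b"")
    except urllib.error.URLError as err:
        return f"Could not connect (DNS issue or server down?): {err.reason}"


# Offline examples; call fetch_and_diagnose() with your real sitemap URL.
print(diagnose(404, b""))
print(diagnose(200, b"<urlset><url></urlset>"))
```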

Conclusion

Mastering XML sitemaps is a vital part of any successful SEO strategy. By understanding how to submit sitemaps, organize them with a sitemap index, fix errors, and manage the robots exclusion protocol, you can ensure that your website is crawled efficiently by search engines. Additionally, tools like Google Search Console provide valuable insights into how Googlebot is interacting with your site, making it easier to optimize for better indexing.

By fixing crawl errors, submitting sitemaps correctly, and managing robots.txt files, you are enhancing your site’s chances of being indexed and ranked favorably in search results. Always remember that XML sitemaps are more than just a technical SEO task; they’re your website’s key to visibility.

FAQs

1. What is an XML sitemap, and why do I need it?

An XML sitemap is a file that lists the pages of your website, helping search engines crawl and index them efficiently. It ensures that all important content is discovered, even pages that may be hard to find through links alone.

2. How do I submit a sitemap to Google?

You can submit your sitemap by logging into Google Search Console, navigating to the ‘Sitemaps’ section, and entering your sitemap URL. Once submitted, Google will crawl the URLs listed in the sitemap.

3. What is a sitemap index, and when should I use it?

A sitemap index is a file that lists multiple sitemaps, allowing you to organize your site’s URLs more effectively, especially for large websites.

4. What should I do if my sitemap contains errors?

Check Google Search Console for any errors, resolve them (like fixing broken URLs or correcting XML syntax), and then resubmit the sitemap.

5. What is the robots.txt file, and how does it affect my sitemap?

The robots.txt file tells search engine bots which pages to crawl and which to ignore. It works in tandem with your XML sitemap to improve crawling efficiency.