
Knowing how to optimize your site's visibility on search engines is essential. Google Search Console is a powerful tool that lets webmasters and digital marketers monitor and manage their site's indexing status, identify crawl errors, and make sure their content actually reaches its audience. In this post, we will explore the main indexing features of Google Search Console: the URL Inspection tool, checking indexing status, handling crawl errors, generating and submitting sitemaps, and creating a robots.txt file.

Understanding Google Search Console Indexing

Google Search Console (GSC) is a free service from Google that helps website owners and digital marketers monitor and maintain their site's presence in Google Search results. One of its most important features is its indexing reports, which show which of your pages Google has indexed. Indexing is the process by which Google analyzes the pages it has crawled and stores them in its index, making them eligible to appear in search results.


Why is Indexing Important?

When your pages are indexed, they can be displayed in search results. If your pages are not indexed, they won’t appear in search results, regardless of how optimized they are. Therefore, understanding how to use GSC for effective indexing is essential for any digital marketer.


URL Inspection Tool

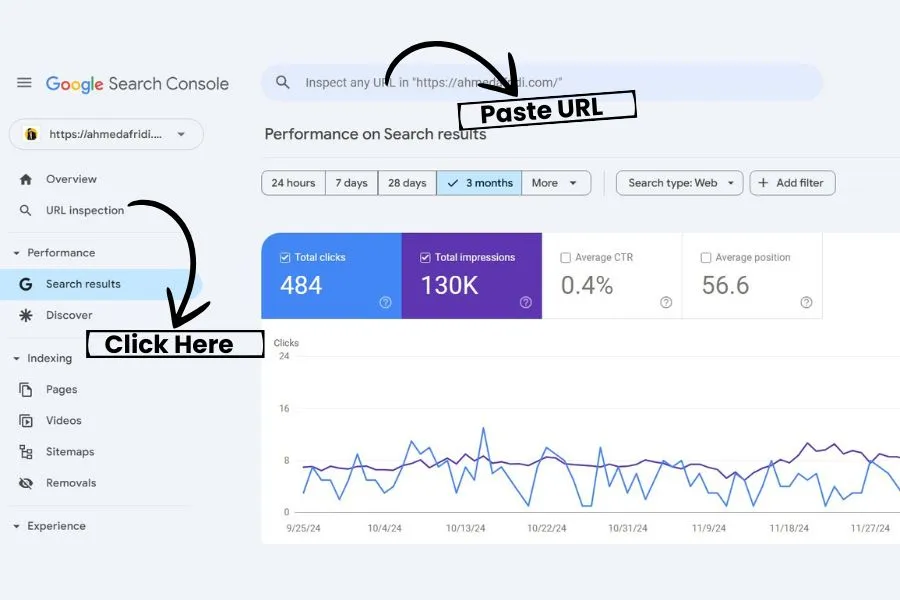

The URL Inspection tool is one of the most useful features of Google Search Console. It lets you check the indexing status of any specific URL on your site.

How to Use the URL Inspection Tool

- Access Google Search Console: Open Google Search Console and sign in with an account that has access to your site.

- Select Your Property: Choose the website you want to inspect.

- Enter the URL: Paste the URL you want to check into the inspection search bar at the top of the GSC dashboard and press Enter.

- Analyze the Results: After the inspection, you'll see whether the URL is indexed. You'll also see details about any issues affecting how the URL is crawled or indexed.

Example: When you inspect a URL, the tool reports whether the URL is on Google (indexed) or not. If it is not indexed, it explains why, for example “Discovered - currently not indexed” or “Crawled - currently not indexed.”
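If you need to check many URLs, Google also exposes this data through the Search Console URL Inspection API. The sketch below assumes you already have the parsed JSON response for one URL (for example, from `service.urlInspection().index().inspect(body=...).execute()` with google-api-python-client and an authorized `searchconsole` v1 service). The helper name `summarize_inspection` and the sample values are hypothetical; the helper only reads the `verdict` and `coverageState` fields under `inspectionResult.indexStatusResult`.

```python
# Hypothetical helper: turn one URL Inspection API response into a short summary.
# ASSUMPTION: `response` is the parsed JSON returned by the Search Console
# URL Inspection API, where index details live under
# inspectionResult -> indexStatusResult.


def summarize_inspection(response: dict) -> str:
    status = response.get("inspectionResult", {}).get("indexStatusResult", {})
    # "PASS" means the URL is indexed; other verdicts (e.g. "NEUTRAL" for
    # excluded, "FAIL" for errors) mean it is not.
    verdict = status.get("verdict", "VERDICT_UNSPECIFIED")
    # coverageState is the human-readable reason shown in Search Console.
    coverage = status.get("coverageState", "unknown")
    if verdict == "PASS":
        return f"Indexed: {coverage}"
    return f"Not indexed ({verdict}): {coverage}"


# Example with a hand-written (not real) response:
sample = {
    "inspectionResult": {
        "indexStatusResult": {
            "verdict": "NEUTRAL",
            "coverageState": "Crawled - currently not indexed",
        }
    }
}
print(summarize_inspection(sample))  # Not indexed (NEUTRAL): Crawled - currently not indexed
```

Reading the fields with `.get()` and defaults keeps the helper from crashing if a response is missing a section.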

How to Check the Indexing Status of Your URLs

To check the indexing status of your URLs effectively:

- Use the URL Inspection Tool as described above.

- Regularly monitor the Page indexing report in GSC (formerly called the Coverage report) to see which URLs are not indexed and why. It lists reasons such as ‘Crawled - currently not indexed’ and ‘Discovered - currently not indexed’.

Crawl Errors and Their Solutions

Crawl errors occur when Googlebot tries to access a page on your site but fails. These errors can significantly affect your site's indexing status.

Common Crawl Errors

- 404 Errors (Not Found): The server cannot find the requested URL.

- 500 Errors (Server Errors): The server encountered an unexpected condition.

- Redirect Errors: Redirect loops, overly long redirect chains, or redirects that point to broken URLs can prevent the target page from being indexed.
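To triage the error types listed above outside of GSC, you can check a URL's HTTP status yourself and map it to one of these categories. This is a minimal sketch using only Python's standard library; `classify_status` and `fetch_status` are hypothetical helper names, and the category wording simply mirrors this post.

```python
import urllib.error
import urllib.request


def classify_status(code: int) -> str:
    """Map an HTTP status code to the crawl-error categories in this post."""
    if code == 404:
        return "404 Not Found: fix or remove links to this URL"
    if 500 <= code <= 599:
        return "Server error (5xx): check server health"
    if 300 <= code <= 399:
        return "Redirect: verify the target and look for loops"
    if 400 <= code <= 499:
        return "Other client error (4xx)"
    if 200 <= code <= 299:
        return "OK"
    return "Unexpected status"


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the final HTTP status for `url`.

    urllib follows redirects automatically, so this reports the status of
    the final destination, not any intermediate 3xx responses.
    """
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # 4xx and 5xx responses are raised as HTTPError; keep the status code.
        return err.code
```

Usage would look like `classify_status(fetch_status("https://yourwebsite.com/some-page"))`. Network failures such as DNS errors or timeouts still raise `urllib.error.URLError`, and some servers reject `HEAD` requests (often with 405), in which case a plain GET is a reasonable fallback.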

Solutions to Crawl Errors

- Fix Broken Links: Use the Page indexing report to identify 404 errors, then update or remove the broken links.

- Check Server Health: Ensure your server is running smoothly to avoid 500 errors.

- Review Redirects: Make sure your redirects are correctly set up and not causing loops.
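To check for the loops mentioned above, you can walk your redirect rules as a source-to-target map. This is a minimal sketch: the `redirects` dictionary stands in for however your server actually stores its rules (an assumption), `follow_redirects` is a hypothetical helper, and the 10-hop threshold is an arbitrary illustrative limit.

```python
from __future__ import annotations


def follow_redirects(
    start: str, redirects: dict[str, str], max_hops: int = 10
) -> tuple[list[str], str]:
    """Follow source -> target rules from `start` and report the outcome.

    Returns the chain of visited paths and one of "ok", "loop",
    or "too many hops".
    """
    chain = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        if current in seen:
            # We have been here before, so the redirects form a cycle.
            chain.append(current)
            return chain, "loop"
        chain.append(current)
        seen.add(current)
        if len(chain) - 1 > max_hops:
            return chain, "too many hops"
    return chain, "ok"


# Hypothetical rules: /old is fine, /a and /b redirect to each other.
rules = {"/old": "/new", "/a": "/b", "/b": "/a"}
print(follow_redirects("/old", rules))  # (['/old', '/new'], 'ok')
print(follow_redirects("/a", rules))  # (['/a', '/b', '/a'], 'loop')
```

Tracking visited paths in a set detects a loop as soon as a path repeats, instead of waiting for the hop limit to run out.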

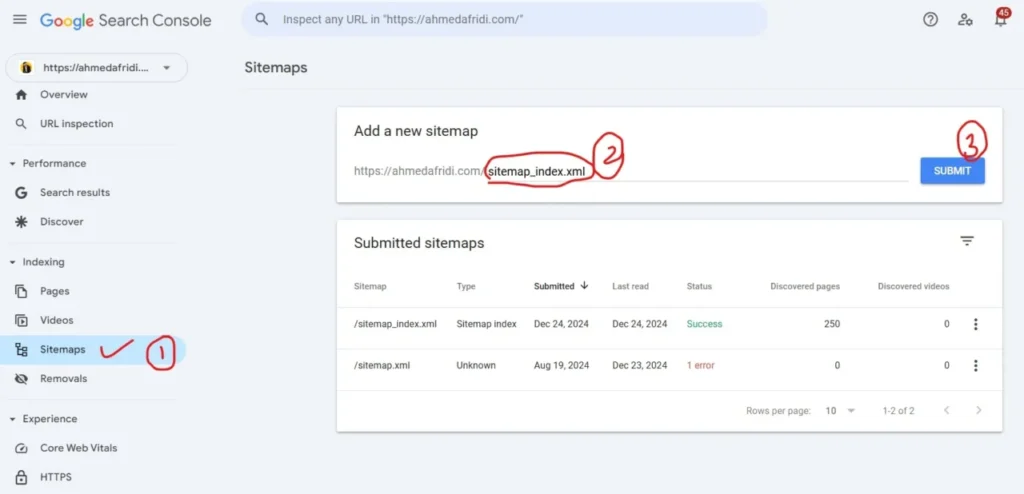

Generating and Submitting a Sitemap

A sitemap is a file that provides search engines with a roadmap of your site’s content. Submitting a sitemap helps Google discover and index your pages more efficiently.

How to Generate a Sitemap

- Use a Sitemap Generator: Tools like XML-sitemaps.com can help you create a sitemap.

- Manual Creation: If you prefer, you can manually create a sitemap in XML format.
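If you create a sitemap manually, a short script can produce the XML for you. The sketch below uses Python's built-in `xml.etree.ElementTree` and the standard sitemap namespace (`http://www.sitemaps.org/schemas/sitemap/0.9`); `build_sitemap` is a hypothetical helper name, and it only writes the required `<loc>` element, leaving out optional tags such as `<lastmod>`.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> bytes:
    """Return a minimal <urlset> sitemap with one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        url_el = ET.SubElement(urlset, "url")
        # ElementTree escapes special characters such as "&" automatically.
        ET.SubElement(url_el, "loc").text = url
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


xml_bytes = build_sitemap(["https://yourwebsite.com/", "https://yourwebsite.com/about"])
print(xml_bytes.decode("utf-8"))
```

To publish it, write the bytes to a file (for example `open("sitemap.xml", "wb").write(xml_bytes)`) and upload it to your site, such as https://yourwebsite.com/sitemap.xml.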

Submitting Your Sitemap

- In GSC, open the Sitemaps report (under Indexing).

- Enter the sitemap's path relative to your property (for example, sitemap.xml) in the “Add a new sitemap” field.

- Click “Submit” and monitor the status for any issues.
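Before submitting, you can catch common problems locally. This sketch checks a few rules from the sitemap protocol: the file must be well-formed XML, list at most 50,000 URLs, be no larger than 50 MB uncompressed, and use fully qualified URLs in `<loc>`. `check_sitemap` is a hypothetical helper; it only handles regular `<urlset>` sitemaps (a sitemap index file would be flagged), and the GSC Sitemaps report remains the authority on what Google accepted.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET

NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"
MAX_URLS = 50_000  # per-file URL limit in the sitemap protocol
MAX_BYTES = 50 * 1024 * 1024  # 50 MB uncompressed size limit


def check_sitemap(data: bytes) -> list[str]:
    """Return a list of problems found in a <urlset> sitemap (empty if none)."""
    problems = []
    if len(data) > MAX_BYTES:
        problems.append("file is larger than 50 MB uncompressed")
    try:
        root = ET.fromstring(data)
    except ET.ParseError as err:
        # Nothing else can be checked if the XML does not parse.
        return problems + [f"not well-formed XML: {err}"]
    if root.tag != NS + "urlset":
        problems.append(f"unexpected root element: {root.tag}")
    locs = [(el.text or "").strip() for el in root.iter(NS + "loc")]
    if len(locs) > MAX_URLS:
        problems.append(f"{len(locs)} URLs exceeds the 50,000 limit; split the sitemap")
    for loc in locs:
        # The protocol requires fully qualified URLs in <loc>.
        if not loc.startswith(("http://", "https://")):
            problems.append(f"loc is not an absolute URL: {loc!r}")
    return problems
```

An empty list means none of these checks failed; it does not guarantee Google will index every listed URL.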

Creating and Publishing a robots.txt File

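The robots.txt file is a crucial component for controlling how search engines crawl your site. It tells crawlers which paths they may request and which to skip. Note that robots.txt controls crawling, not indexing: a blocked URL can still be indexed (without its content) if other pages link to it.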

How to Create a robots.txt File

- Open a text editor and create a new file named robots.txt.

- Add a User-agent line, followed by the Disallow and Allow rules for the paths you want to control.

Example:

```text
User-agent: *
Disallow: /private/
Allow: /
```
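You can sanity-check rules like the example above before uploading them, using Python's built-in `urllib.robotparser`. Two caveats: Python's parser applies the first matching rule in file order, while Google uses the most specific (longest) matching path, so results can differ for files with overlapping rules (for this example they agree); and Python's parser treats a blank line as the end of a rule group, so the sketch keeps the directives on consecutive lines.

```python
from urllib.robotparser import RobotFileParser

# The example rules from above, without blank lines between directives.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# yourwebsite.com is the placeholder domain used elsewhere in this post.
print(parser.can_fetch("Googlebot", "https://yourwebsite.com/private/report.html"))  # False
print(parser.can_fetch("Googlebot", "https://yourwebsite.com/blog/post"))  # True
```

Because there is no group specifically for Googlebot, both checks fall back to the `User-agent: *` group.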

Publishing Your robots.txt File

- Place the robots.txt file in the root directory of your website (e.g., https://yourwebsite.com/robots.txt).

- Check the robots.txt report in GSC (under Settings), which replaced the older robots.txt Tester, to confirm that Google can fetch and parse the file.
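Because crawlers only look for the file at the root of a host, you can derive where a robots.txt must live from any page URL. This is a small sketch using Python's standard `urllib.parse`; `robots_url` is a hypothetical helper name. Keep in mind that a robots.txt file applies only to the protocol, host, and port it is served from, so a subdomain such as `shop.yourwebsite.com` needs its own file.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt location that governs `page_url`."""
    parts = urlsplit(page_url)
    # Keep scheme and host (including any port); replace path, drop query/fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://yourwebsite.com/blog/post?id=7#top"))  # https://yourwebsite.com/robots.txt
```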


Conclusion

Mastering Google Search Console indexing is essential for any digital marketer looking to enhance their website’s visibility. By utilizing the URL inspection tool, checking indexing status, addressing crawl errors, generating and submitting sitemaps, and managing your robots.txt file, you can improve your site’s performance in search results. Remember, the digital landscape is competitive, and staying on top of these elements can give you a significant edge.

FAQs

1. What is the difference between crawling and indexing?

Crawling is when Googlebot visits and fetches your pages; indexing is when Google analyzes those pages and stores them in its index.

2. How often should I check my Google Search Console for updates?

At least once a week to catch and resolve issues quickly.

3. Can I manually request Google to index a page?

Yes, use the URL inspection tool and click “Request Indexing.”

4. What tools can I use to create a sitemap?

Try Yoast SEO, Screaming Frog, or online generators like XML-sitemaps.com.

5. How long does it take Google to index a new page?

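It varies. Indexing can take anywhere from a few hours to several days, sometimes longer, depending on factors such as how often you publish new content and how easily Google can discover the page (for example, through internal links or your sitemap).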